Getting Started with SEO: Key Concepts and Understanding.

If you’re new to SEO, it can be a bit tricky knowing where to start. There is a lot of contradictory information about SEO on the internet, which can make things confusing. This guide aims to give you a foundation of understanding: a high-level overview of how SEO works. After reading it, you’ll know what SEO is, have an understanding of how SEO works, and know who’s involved with your website’s SEO and what influences how good your SEO is.

What is SEO?

SEO stands for search engine optimisation. It’s the practice of making your website appear nearer the top of page one in search engine results. How easily your website is found is a consequence of how effective your website’s SEO is.

What is a search engine?

Google, Bing and Yahoo Search are all search engines. You have probably used at least one of these in the past.

Take Google, for example: you type something in the search box, press return (or click the search button) and you’re provided with search results. The search results appear in a list, and there are usually multiple pages of results.

Where a website appears in the list of search engine results is referred to as its rank or position.

How does a search engine determine the order of sites in search engine results?

This is where things get interesting. Once you know how search engines determine where pages rank in search engine results, you’ll have gained an idea of how to improve your website’s rankings.

Before we get into how search engines determine the order of sites in results, it helps a little to understand the objective of search engines.

The objective of search engines.

Search engines are run by companies, and the objective of most companies is to make money.

Search engines make money by advertising. For this advertising to be something that’s easily sold, and that people want to buy, search engines need to have users (that’s you and me, and the other people who type things into search engines). The more users, the more people can be advertised to, and the more advertising can be sold.

Ask yourself: Why do I use one search engine rather than another?

You’ll probably find that the answer to that question is something along the lines of: Because the search results are better.

Consequently, a search engine gains more users by providing better results.

What is it that makes one result better than another? Relevance. By providing the most relevant web page at the top of the list, you’re more likely to find what you’re searching for.

If we add all of this up we get something like:

Search engines make more money as a product of providing the most relevant results.

Believe it or not, there was a time (before 2000) when search engine results weren’t as relevant as they are today. If you searched for something, you might have to visit several websites to find what you were looking for. This was time-consuming and involved a lot of reading.

Then Google was launched. For the first time, you could search for something, visit the site listed at the top of the search results and usually find what you were looking for. People were saying things like “I’ll never use another search engine again”.

Google achieved their objective of gaining users by providing relevant search results.

A search engine has no reason to care which particular website is at the top of the search results. What it cares about is relevance, but there’s more to it than relevance alone.

Relevance alone is not enough.

Imagine if you searched for “how do frogs breathe underwater?” and you found a scientific paper detailing oxygen transport in cutaneous respiration. It’s correct and very relevant, but it’s not very accessible to most people, and it’s not very engaging.

Now imagine that every time you searched you had to trawl through scientific papers, then do more searching just to be able to work out what they’re talking about.

You probably wouldn’t use that search engine very much, would you?

Search engines need to lead you to engaging, easy to understand, relevant content to keep you as a user.

What do search engines use to work out relevance, engagement and ease of understanding?

The words on your website.

That’s right. Search engines read your website (very much like a human would) to work out how relevant it is, how easy the content is to understand and how engaging your site’s content is.

There aren’t any magic SEO tools that you plug into your website to automagically make your SEO amazing (if such tools existed, SEO would be moot, but I’ll come to that later).

If someone does SEO work on your website, what they’re doing is mostly changing the words, or where the words are placed. They’re doing this because it’s mostly the words on your website that Google reads to work out how your website should rank for certain search terms.

There is a bit more to it than the words alone, but without the “word” aspect being covered, everything else is moot. You can’t make any SEO magic happen if there aren’t any words.

How search engines read websites.

Search engines use crawlers to read websites. Sometimes these are referred to as spiders, bots, or robots. These are all computer programs that read websites.

Usually you’d request that your website be crawled and indexed using the respective search engine’s webmaster tools account (for example, Google Search Console or Bing Webmaster Tools).

Search engines will eventually find a website of their own accord, but this can take a very long time. There are well over a billion websites, so crawlers are kept very busy.

When a crawler is directed to your website, it will look for a file called robots.txt. This file is placed in the root folder of your website (the domain’s document root), so it can be found at an address like https://example.com/robots.txt.

The robots.txt file usually contains instructions for the robot such as what it’s allowed to crawl and what it isn’t allowed to crawl, if it’s allowed to access the site at all, if it should add a delay to page requests, and most importantly: the location of your website’s sitemap.
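To make those instructions concrete, here’s a small robots.txt and how a crawler might interpret it, sketched in Python with the standard library’s `urllib.robotparser`. The file contents and the example.com URLs are made up for illustration. Bear in mind that not every search engine honours every directive; Google, for example, ignores `Crawl-delay`.

```python
from urllib.robotparser import RobotFileParser

# A hypothetical robots.txt for example.com (illustration only).
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Crawl-delay: 5

Sitemap: https://example.com/sitemap.xml
"""

parser = RobotFileParser()
# parse() takes the file's lines directly, so no network request is made here.
parser.parse(ROBOTS_TXT.splitlines())

# The questions a well-behaved crawler asks before fetching pages:
print(parser.can_fetch("*", "https://example.com/about"))        # True
print(parser.can_fetch("*", "https://example.com/admin/login"))  # False
print(parser.crawl_delay("*"))                                   # 5
print(parser.site_maps())  # ['https://example.com/sitemap.xml']
```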

The sitemap is another file (usually sitemap.xml) in the document root of your site. This file contains a list of your website’s pages.
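A sitemap is just an XML file that follows the sitemaps.org protocol. The sketch below uses Python’s built-in `xml.etree.ElementTree` to generate a minimal one; the `build_sitemap` helper and the example.com URLs are hypothetical, and real sitemaps often add optional tags such as `<lastmod>`.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(urls):
    """Return sitemap.xml content listing each URL in its own <url><loc> entry."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in urls:
        url_element = ET.SubElement(urlset, "url")
        ET.SubElement(url_element, "loc").text = url
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


sitemap_xml = build_sitemap([
    "https://example.com/",
    "https://example.com/about",
])
print(sitemap_xml)
```

Because ElementTree escapes special characters (such as `&` in query strings) for you, building the file this way is safer than gluing strings together by hand.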

By reading the robots.txt and the sitemap.xml, the crawler has discovered instructions about what it can and can’t read on your site, and a list of pages it should read. The pages in the sitemap then get read.
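Putting those two files together, a crawler’s first job can be sketched like this: read the `<loc>` entries from the sitemap and keep only the URLs that robots.txt allows. This is a simplified illustration using Python’s standard library; the `pages_to_crawl` function and the sample files are hypothetical, and real crawlers also fetch these files over the network, handle errors and pace their requests.

```python
import xml.etree.ElementTree as ET
from urllib.robotparser import RobotFileParser

# Prefix mapping for the sitemaps.org namespace, used by findall() below.
NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def pages_to_crawl(robots_txt, sitemap_xml, user_agent="*"):
    """Return the sitemap URLs that robots.txt allows user_agent to fetch."""
    rules = RobotFileParser()
    rules.parse(robots_txt.splitlines())
    root = ET.fromstring(sitemap_xml)
    urls = [loc.text.strip() for loc in root.findall("sm:url/sm:loc", NS)]
    return [url for url in urls if rules.can_fetch(user_agent, url)]


robots_txt = "User-agent: *\nDisallow: /admin/\n"
sitemap_xml = """<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://example.com/</loc></url>
  <url><loc>https://example.com/about</loc></url>
  <url><loc>https://example.com/admin/settings</loc></url>
</urlset>"""

print(pages_to_crawl(robots_txt, sitemap_xml))
# ['https://example.com/', 'https://example.com/about']
```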

Crawlers, when reading your site’s pages, will also follow links (unless a link has the rel="nofollow" attribute, which asks crawlers not to follow it) to discover internally linked resources and any external websites that your site links to.
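Link discovery can be sketched with Python’s built-in `html.parser`. The hypothetical `LinkCollector` class below resolves each `<a href>` against the page’s address and sets aside links whose `rel` attribute contains `nofollow`. It’s a simplification: Google, for one, now treats `nofollow` as a hint rather than a strict rule.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class LinkCollector(HTMLParser):
    """Collects absolute link URLs, separating out rel="nofollow" links."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.followed = []
        self.skipped = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        attributes = dict(attrs)
        href = attributes.get("href")
        if not href:
            return
        # Turn relative links such as "contact.html" into full URLs.
        absolute = urljoin(self.base_url, href)
        # rel can hold several space-separated values, e.g. "nofollow noopener".
        rel_values = (attributes.get("rel") or "").lower().split()
        if "nofollow" in rel_values:
            self.skipped.append(absolute)
        else:
            self.followed.append(absolute)


html = (
    '<a href="/about">About</a> '
    '<a href="https://other.example/" rel="nofollow noopener">Sponsor</a> '
    '<a href="contact.html">Contact</a>'
)
collector = LinkCollector("https://example.com/blog/")
collector.feed(html)
print(collector.followed)  # ['https://example.com/about', 'https://example.com/blog/contact.html']
print(collector.skipped)   # ['https://other.example/']
```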

What happens next varies according to the search engine in question.

Google records a copy of the crawled website on its own internal systems. Google also recrawls your site periodically, and reportedly keeps about 10 copies of your website. These copies are subject to Google’s algorithm, which reads your site again and does a kind of “giant word sum” on the site’s words to work out what your site is about, and how it should rank in results for certain search terms.

When I say “giant word sum”, I mean a mixture of logic and statistical processing. For example, if your site’s home page contains this text:

This is my dog spot. This website is all about spot, and the dog things he does. I love spot, he’s a good dog.

Google’s “giant word sum” does something like:

Dog = Three times

My = possessive = belonging

I = first-person subjective

Therefore:

Dog owner website about dog called spot

If search term:

Who owns a dog called spot?

Then: Site is relevant

or:

If search term:

Who owns a good dog called spot?

Then: Site is relevant ++

This is a massive oversimplification of what’s actually going on, but it gives you an idea.
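The dog-and-spot example above can be turned into a toy “giant word sum” in Python. This is purely illustrative and not Google’s algorithm: it counts words, ignores a small, made-up list of filler words (`STOPWORDS`), and scores a search term by adding up how often each of its words appears on the page. The function names are hypothetical.

```python
import re
from collections import Counter

# A tiny, made-up list of filler words to ignore (hypothetical, for illustration).
STOPWORDS = {"a", "about", "all", "and", "he", "i", "is", "my", "s", "the", "this", "who"}


def words(text):
    """Lower-case the text and split it into words made of letters only."""
    return re.findall(r"[a-z]+", text.lower())


def word_counts(text):
    """Count how often each non-filler word appears on the page."""
    return Counter(w for w in words(text) if w not in STOPWORDS)


def relevance(counts, query):
    """Add up the page counts of each distinct non-filler word in the query."""
    return sum(counts[w] for w in set(words(query)) if w not in STOPWORDS)


page = ("This is my dog spot. This website is all about spot, "
        "and the dog things he does. I love spot, he's a good dog.")
counts = word_counts(page)
print(counts["dog"], counts["spot"])                          # 3 3
print(relevance(counts, "Who owns a dog called spot?"))       # 6
print(relevance(counts, "Who owns a good dog called spot?"))  # 7
```

The extra word “good” is what gives the second search term its higher score, the toy equivalent of “relevant ++”.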

The “giant word sum” I’ve been talking about is what people call an algorithm.

To answer the question “how does a search engine work out which site to list at the top of search results?”, the process is something like:

- The search engine reads a site multiple times, storing a local copy each time.

- An algorithm is applied to the stored copies to determine the subject matter, how accessible that subject matter is, and which search terms it’s relevant to.

- When a search query is submitted, the search engine serves the most relevant sites, ordered according to the algorithm’s output.
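The three steps above can be sketched as a toy index in Python. Everything here is hypothetical: `ToyIndex` keeps the most recent copies of each page (`MAX_SNAPSHOTS = 10` simply mirrors the figure mentioned earlier), scores the newest copy with plain word counting in place of the secret algorithm, and returns URLs ordered by that score.

```python
import re
from collections import Counter, deque

MAX_SNAPSHOTS = 10  # assumption: mirrors the "about 10 copies" figure in the text


def words(text):
    """Lower-case the text and split it into words made of letters only."""
    return re.findall(r"[a-z]+", text.lower())


class ToyIndex:
    """Hypothetical toy index: stores recent copies per URL and ranks by word counts."""

    def __init__(self):
        self.snapshots = {}  # url -> deque of page texts, newest last

    def store(self, url, text):
        # Step 1: each crawl stores a copy; the oldest is dropped once the limit is hit.
        self.snapshots.setdefault(url, deque(maxlen=MAX_SNAPSHOTS)).append(text)

    def score(self, url, query):
        # Step 2: apply the stand-in "algorithm" to the newest stored copy.
        counts = Counter(words(self.snapshots[url][-1]))
        return sum(counts[w] for w in set(words(query)))

    def search(self, query):
        # Step 3: most relevant first; ties broken alphabetically; zero scores dropped.
        scored = [(self.score(url, query), url) for url in self.snapshots]
        scored.sort(key=lambda pair: (-pair[0], pair[1]))
        return [url for score, url in scored if score > 0]


index = ToyIndex()
index.store("https://spot.example/", "My dog spot is a good dog.")
index.store("https://cats.example/", "All about cats and cat food.")
index.store("https://dogs.example/", "Dog training tips for every dog owner.")
print(index.search("good dog"))  # ['https://spot.example/', 'https://dogs.example/']
```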

I daresay that the algorithm sounds interesting, but here’s the thing:

Search engine algorithms are kept secret.

Why search engine algorithms are kept secret.

Imagine if you had a magic wand, and you could point that wand at a website and PEW it’s top of page one!

If I had a magic wand like that, I wouldn’t be sitting here writing blog posts; I’d be PEWing everyone’s website to the top of page one and making a lot of money… or would I?

Given enough time and enough PEWing, everyone (that can afford it) is at the top of page one.

Search engines now have a problem:

- Page one is really, really long (as it lists loads of websites).

- Search results aren’t sorted by relevance any more, they’re sorted by who has the most money.

- The search engine has become barely usable due to the above.

- The search engine company has no users and can’t make money.

I don’t have a magic wand, so that’s not going to happen.

The problem that search engines have is that if they make their algorithms public, people game them and an approximation of the above takes place.

What I mean by “game them” is that people adjust their site to manipulate the algorithm in order to improve page rankings. This has taken place in the past.

Google used to use meta keywords as a ranking factor. These are words that you can add to your page’s code in a meta tag, like this: `<meta name="keywords" content="dog, dog training, dog food">`.

People started stuffing loads of keywords into their meta tags to rank better.
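To see why stuffing paid off against an engine that trusted this tag, here’s a deliberately naive, hypothetical scorer in Python that gives one point per matching meta keyword. It doesn’t describe any current search engine; Google has said it doesn’t use the keywords meta tag for ranking.

```python
from html.parser import HTMLParser


class MetaKeywordsParser(HTMLParser):
    """Collects the comma-separated values of <meta name="keywords" content="...">."""

    def __init__(self):
        super().__init__()
        self.keywords = []

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if tag == "meta" and (attributes.get("name") or "").lower() == "keywords":
            content = attributes.get("content") or ""
            self.keywords += [k.strip().lower() for k in content.split(",") if k.strip()]


def naive_meta_score(html, query):
    """Hypothetical, deliberately naive scorer: one point per exactly matching keyword."""
    parser = MetaKeywordsParser()
    parser.feed(html)
    parser.close()
    return parser.keywords.count(query.lower())


honest_page = '<meta name="keywords" content="dog, dog training, dog food">'
stuffed_page = '<meta name="keywords" content="' + ", ".join(["dog"] * 50) + '">'

print(naive_meta_score(honest_page, "dog"))   # 1
print(naive_meta_score(stuffed_page, "dog"))  # 50
```

Repeating a keyword costs the page author nothing, which is exactly why a signal like this was so easy to game.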

Google has long taken backlinks (links to your site from other websites) into account, so link farms (pages that are just big lists of links) started appearing to make websites rank better.
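Link-based ranking is easiest to see with a simplified version of PageRank, the link-analysis idea Google’s founders published in the late 1990s; it is not Google’s current algorithm. In this Python sketch, each page shares its score among the pages it links to, so a page gains score from inbound links. The page names and the 20-page link farm are hypothetical.

```python
def pagerank(links, damping=0.85, iterations=50):
    """Simplified PageRank computed by power iteration.

    `links` maps each page to the pages it links to. A page with no outgoing
    links spreads its score evenly across every page.
    """
    pages = set(links) | {t for targets in links.values() for t in targets}
    n = len(pages)
    rank = {page: 1.0 / n for page in pages}
    for _ in range(iterations):
        # Every page gets a small baseline share (the "random jump").
        new_rank = {page: (1.0 - damping) / n for page in pages}
        for page in pages:
            targets = links.get(page, [])
            receivers = targets if targets else list(pages)
            share = damping * rank[page] / len(receivers)
            for target in receivers:
                new_rank[target] += share
        rank = new_rank
    return rank


web = {
    "blog": ["honest-shop", "news"],
    "news": ["blog", "honest-shop"],
    "honest-shop": ["blog"],
    "spammy-shop": ["news"],
}
# A hypothetical link farm: 20 throwaway pages that only link to spammy-shop.
farmed_web = dict(web)
farmed_web.update({f"farm-{i}": ["spammy-shop"] for i in range(20)})

before = pagerank(web)["spammy-shop"]
after = pagerank(farmed_web)["spammy-shop"]
print(f"{before:.4f} -> {after:.4f}")  # 0.0375 -> 0.1125
```

The farm pages are worthless on their own, but pointing all of them at one site triples its score, which is exactly the kind of manipulation that made link farms attractive.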

Both of these are examples of how people have tried to manipulate Google’s algorithm.

Google no longer uses the meta keywords tag for ranking, and it says it ignores or discounts links it considers manipulative, such as those from link farms. How much weight backlinks carry today is widely debated; we don’t know for sure because the algorithm isn’t public. What we do know is that people can’t be trusted not to manipulate the algorithm. So the algorithm stays secret.


Just as I have no magic wand, there’s no magic SEO tool that makes your website’s SEO amazing. If such tools did exist, everyone would use them, and the problem outlined above would occur. The tools would then be moot (because of that problem), so simply by existing, they would undermine themselves.

A tool designed to make SEO better defeats its own purpose if it completely breaks how search rankings work. If rankings stop reflecting relevance, search engines lose their users. Consequently, search engines are keen for such tools not to exist, which is why their algorithms are kept secret.

The effect of a secret algorithm.

Because the algorithm is a secret, people try to work it out by doing experiments.

If you’ve studied a science, you’ll know that experiments are usually conducted under controlled circumstances to prove or disprove a hypothesis.

Not all the SEO experiments are carried out under these circumstances, and how can they be, if the algorithm is unknown? The short answer is: They can’t.

Remember the 10 copies of your website that Google keeps? They’re likely kept because time is a factor: Google openly states that freshness of content is a ranking factor.

If you combine the time and the secret factors, you can’t do a very controlled experiment to work things out. At best you get an approximation, which is why there’s so much speculative and contradictory information on the internet about SEO.

Google’s algorithm also changes over time, so what might have been a near-accurate approximation in 2017 isn’t necessarily accurate today.

You might well be reading this and wondering how on earth effective SEO can be possible. I’ve often thought this myself.

There are, however, some basic rules you can follow that will get you to a fairly good place when it comes to SEO.

SEO good practices.

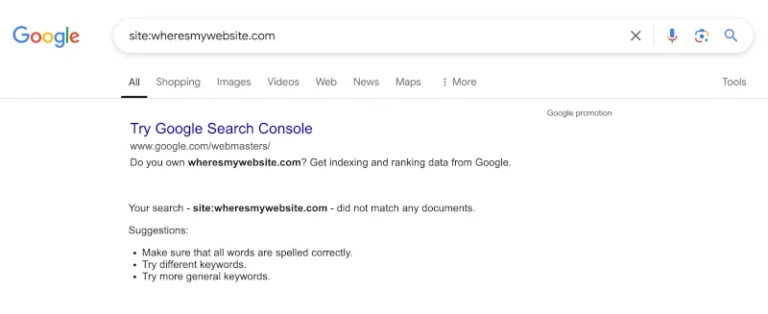

- Your website has to be crawlable. If it can’t be accessed by a search engine’s robot, your site will never rank.

- Webmaster tools accounts can be used to check that your website is accessible to crawlers and, if crawling isn’t possible, give some indication of what the problem is.

- Cover the basics. Deploy a robots.txt and a sitemap.

- Content is king. You’ll never be penalised for writing engaging content. If your content keeps someone reading, and is interesting to someone, you’re helping search engines retain users.

- Don’t game the system. Even if you get results from doing this, they aren’t likely to persist. Search engines are really anti-manipulation, and make changes to prevent manipulation. If you’ve relied on manipulation to rank, your website is an algorithm update away from not ranking at all.

Further Reading.

An introduction to search engine optimisation gives you specific guidance on where to place the words on your website that you’d like search engines to notice.

Website analysis tools explains how you can use tools to check your site’s technical SEO and carry out keyword research.

Google’s SEO starter guide provides Google’s take on what you should be doing with your SEO.

Learningseo.io links to a lot of SEO resources to give you an end-to-end learning path. It’s an epic amount of information and involves a lot of reading, but anyone getting started with SEO would do well to work through the linked resources. It’s one of the best centralised resources for learning SEO.